Introduction¶

Physician reviews are a crucial source of information for patients researching healthcare providers online. However, the vast number of reviews available across multiple platforms can be overwhelming. Allowing patients to more easily surface relevant information quickly would offer a much better search experience.

A prior analysis of three million physician reviews revealed that their content is generally constrained to six predominant themes:

- Bedside manner: Refers to the physician's tone and demeanor during visits.

- Communication: The physician's clarity and effectiveness in communication.

- Quality of care: The patient's perception of the quality of care they received.

- Office staff: The support staff managing patient intake and appointment scheduling.

- Quality time: The patient's perception that the visit was valuable and worthwhile.

- Wait time: The time between patient arrival and the start of the visit.

Approximately 95% of all reviews reference at least one of these themes, with "quality of care" being the most prevalent (present in 80% of reviews). Over 62% of reviews contain more than one theme, with one or two being the most common.

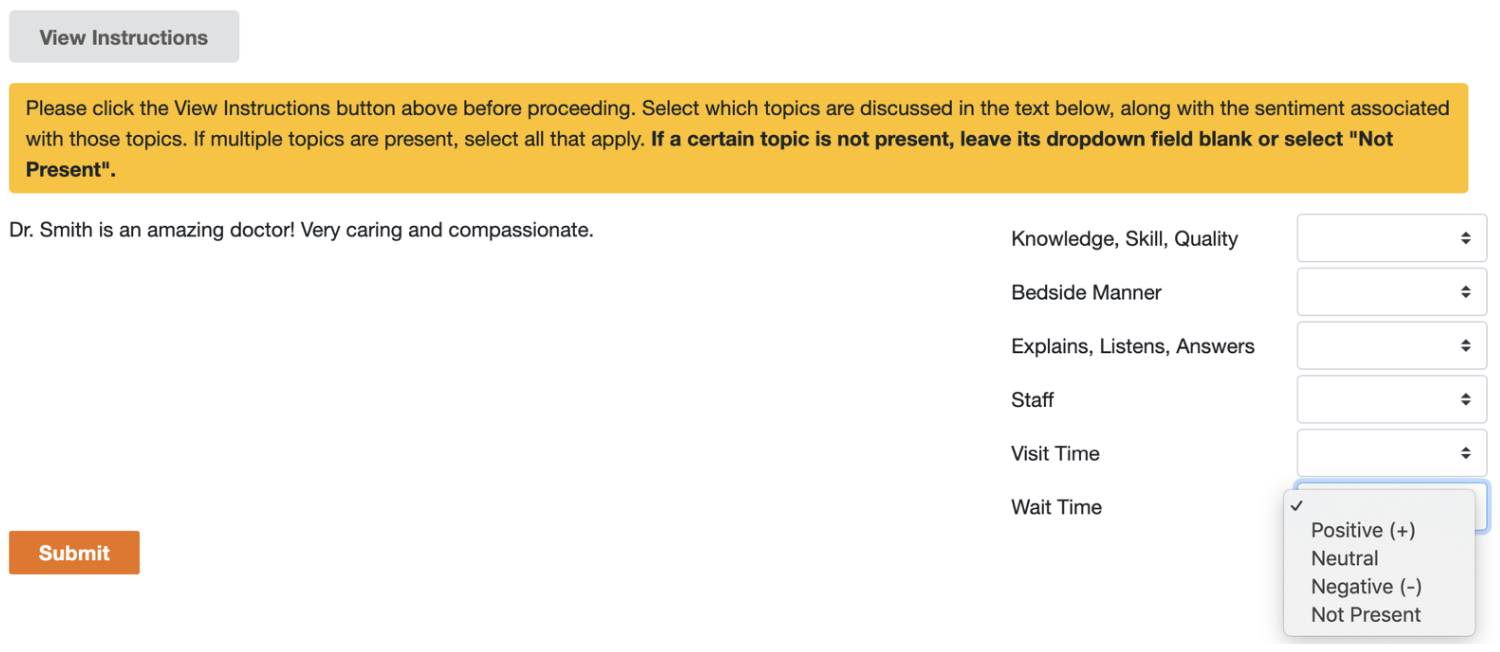

A classification model will be built to identify these themes in new reviews, helping to extract and surface the information most crucial to patients. However, to build such a model, labeled training data are required. To expedite the process, data were labeled using Amazon's crowd-sourcing platform, Mechanical Turk. Each review was evaluated by three participants, who assigned up to six theme labels (see figure below). A label was finalized only when two or more participants agreed.

Let's get started by importing some useful libraries and reading in the data.

%config InlineBackend.figure_format = 'retina'

from sklearn.model_selection import train_test_split

from sklearn.metrics import average_precision_score

from sklearn.metrics import precision_recall_curve

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

from sklearn.metrics import roc_auc_score

from sklearn.metrics import roc_curve

from nltk.corpus import stopwords

from gensim.models import Word2Vec

import gensim.downloader

from matplotlib.pyplot import *

import pandas as pd

import numpy as np

pd.set_option('display.max_columns', 500)

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.layers import Dense, Embedding

from tensorflow.keras.layers import GlobalMaxPooling1D

from tensorflow.keras.layers import SpatialDropout1D

from tensorflow.keras.layers import Bidirectional, GRU

from tensorflow.keras.metrics import Precision, Recall, AUC

from tensorflow.keras.models import Sequential

We can start by defining a function to remove punctuation and special characters from the text. This will serve to standardize the reviews and will improve model performance later on.

def process_text(text):

result = text.lower().strip()

result = re.sub(r'\n', ' ', result)

result = re.sub(r'\t', ' ', result)

result = re.sub(r'\r', ' ', result)

result = re.sub(r'[^\w\s\']', ' ', result)

result = re.sub(r'[^\x00-\x7F]+', ' ', result)

result = re.sub(r' +', ' ', result).strip()

return result

The data reside in eight separate csv files. Let's read each of them in and redefine some column names.

file_id_list = ['3741148', '3741760', '3742390', '3743847', '3745116', '3746169', '3746609', '3749005']

df = pd.DataFrame()

for i in file_id_list:

df = pd.concat([df, pd.read_csv(f'data/batch_{i}_results.csv')], axis=0)

df.columns = [i.split('Input.')[-1].split('Answer.')[-1].lower() for i in df.columns]

df = df[df['assignmentstatus'] != 'Rejected'].sort_values('hitid', ascending=False).reset_index(drop=True)

There were a few cases where only two Mechanical Turk participants labeled a particular review. These will be discarded from the analysis.

discard_reviews = df.groupby('hitid')['hitid'].count()[lambda x: x < 3].index.tolist()

df = df[~df['hitid'].isin(discard_reviews)].reset_index(drop=True)

Define the Target Columns¶

target_columns = ['bedside_manner', 'communication', 'quality_of_care', 'office_staff', 'quality_time', 'wait_time']

df[target_columns] = df[target_columns].replace({-1: 1, 0: 1})

df[target_columns] = df[target_columns].replace({-2: 0, 2: 0})

tmp = df.groupby('usersurveyid')[target_columns].sum().reset_index()

tmp[target_columns] = tmp[target_columns].replace({1: 0, 2: 1, 3: 1})

data = df[['usersurveyid', 'text']].merge(tmp, on='usersurveyid', how='inner').drop_duplicates('usersurveyid').reset_index(drop=True)

data[target_columns].apply(lambda x: x.value_counts())

| bedside_manner | communication | quality_of_care | office_staff | quality_time | wait_time | |

|---|---|---|---|---|---|---|

| 0 | 13622 | 15804 | 4596 | 19068 | 23364 | 23357 |

| 1 | 10893 | 8711 | 19919 | 5447 | 1151 | 1158 |

The data are very imbalanced across several of the targets. For example only about 4.5% of all reviews touch on the "quality time" or "wait time" themes.

Let's view the first few rows of the data.

data.head()

| usersurveyid | text | bedside_manner | communication | quality_of_care | office_staff | quality_time | wait_time | |

|---|---|---|---|---|---|---|---|---|

| 0 | 16662319 | Love her. Griffin has been seeing her since h... | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 16671318 | He is an amazing, reliable and intelligent phy... | 1 | 0 | 1 | 0 | 0 | 0 |

| 2 | 16670938 | Melinda is an AMAZING therapist! She is caring... | 1 | 0 | 1 | 0 | 0 | 0 |

| 3 | 16673989 | Nice dentist that really cares about you as a ... | 1 | 0 | 1 | 0 | 0 | 0 |

| 4 | 16556026 | Dr. Kury Perez is very nice and friendly. She ... | 1 | 1 | 0 | 0 | 0 | 0 |

Below are two example reviews - the first has no assigned themes while the second has two ("bedside manner" and "communication").

print(data.iloc[0]['text'])

Love her. Griffin has been seeing her since he was little

print(data.iloc[4]['text'])

Dr. Kury Perez is very nice and friendly. She knows how to explain your health concerns in a simple way. I highly recommend her as a good healthcare provider.

Prepare the Text¶

Let's now apply the pre-processing function to clean the text.

data = data.dropna(subset=['text']).reset_index(drop=True)

data['processed_text'] = data['text'].apply(process_text)

The data are split into training, validation, and test sets with approximately 75% of the data going to the training set.

x_tr, x_te, y_tr, y_te = train_test_split(data['processed_text'], data[target_columns], test_size=3000, random_state=0)

x_tr, x_va, y_tr, y_va = train_test_split(x_tr, y_tr, test_size=3000, random_state=0)

Build the Model¶

A neural network will be built which incorporates pre-trained word vectors from a popular model called Word2Vec. Word2Vec maps words to a dense vector space such that synonyms are found near each other. The variables below allow us to specify how many tokens to use from each review to classify them (maxlen), the total number of tokens in the model vocabulary (max_features), and the length of the Word2Vec embedding vectors (embedding_size). We'll tokenize the reviews and pad them to the same length.

maxlen = 100

max_features = 20000

embedding_size = 300

tokenizer = Tokenizer(num_words=max_features)

tokenizer.fit_on_texts(x_tr)

x_tr = tokenizer.texts_to_sequences(x_tr)

x_te = tokenizer.texts_to_sequences(x_te)

x_va = tokenizer.texts_to_sequences(x_va)

x_tr = pad_sequences(x_tr, maxlen=maxlen)

x_te = pad_sequences(x_te, maxlen=maxlen)

x_va = pad_sequences(x_va, maxlen=maxlen)

The gensim package has a nice way to download the necessary word vectors. In addition to Word2Vec, GloVe and fasttext word embeddings are also available.

[i for i in gensim.downloader.info()['models'].keys()]

['fasttext-wiki-news-subwords-300', 'conceptnet-numberbatch-17-06-300', 'word2vec-ruscorpora-300', 'word2vec-google-news-300', 'glove-wiki-gigaword-50', 'glove-wiki-gigaword-100', 'glove-wiki-gigaword-200', 'glove-wiki-gigaword-300', 'glove-twitter-25', 'glove-twitter-50', 'glove-twitter-100', 'glove-twitter-200', '__testing_word2vec-matrix-synopsis']

Each word in the review vocabulary can now be embedded.

embedding_index = gensim.downloader.load('word2vec-google-news-300')

word_index = tokenizer.word_index

num_words = min(max_features, len(word_index)) + 1

embedding_matrix = np.zeros((num_words, embedding_size))

for word, i in word_index.items():

if i >= max_features:

break

try:

embedding_matrix[i] = embedding_index[word]

except:

pass

Let's look at the first few embedding values for "doctor".

print(embedding_index['doctor'][:10])

[-0.09326172 0.02734375 0.07958984 -0.01287842 -0.11181641 0.3359375 -0.13867188 -0.12011719 0.02685547 -0.20410156]

The model will be a bidirectional recurrent neural network built using Keras. The Adam optimizer will be used with binary crossentropy as the loss. The area under the receiver operating characteristic and precision-recall curves will be printed after each epoch. Prior experimentation suggests three epochs is sufficient to optimize model performance.

model = Sequential([

Embedding(num_words, embedding_size, weights=[embedding_matrix]),

SpatialDropout1D(0.1),

Bidirectional(GRU(80, return_sequences=True)),

GlobalMaxPooling1D(),

Dense(6, activation='sigmoid')

])

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=[AUC(curve='ROC'), AUC(curve='PR')])

history = model.fit(x_tr, y_tr, batch_size=128, epochs=3, validation_data=(x_va, y_va))

Epoch 1/3 145/145 [==============================] - 43s 275ms/step - loss: 0.3532 - auc_2: 0.9109 - auc_3: 0.8399 - val_loss: 0.2552 - val_auc_2: 0.9534 - val_auc_3: 0.9070 Epoch 2/3 145/145 [==============================] - 41s 281ms/step - loss: 0.2277 - auc_2: 0.9641 - auc_3: 0.9260 - val_loss: 0.2292 - val_auc_2: 0.9623 - val_auc_3: 0.9202 Epoch 3/3 145/145 [==============================] - 41s 284ms/step - loss: 0.2007 - auc_2: 0.9719 - auc_3: 0.9402 - val_loss: 0.2313 - val_auc_2: 0.9621 - val_auc_3: 0.9187

The callback suggests that the model produces an ROC AUC of about 0.92 averaged across the six classes. Let's apply the model to our test set and see how we did.

predict = model.predict(x_va)

94/94 [==============================] - 2s 17ms/step

print(classification_report(y_va, predict.round(), zero_division=0))

precision recall f1-score support

0 0.79 0.84 0.81 1319

1 0.82 0.86 0.84 1037

2 0.85 0.97 0.90 2442

3 0.93 0.89 0.91 655

4 0.61 0.14 0.23 144

5 0.79 0.56 0.66 137

micro avg 0.83 0.88 0.86 5734

macro avg 0.80 0.71 0.72 5734

weighted avg 0.83 0.88 0.85 5734

samples avg 0.83 0.86 0.83 5734

fig, axs = subplots(2, 3, figsize=(12, 7))

fig.subplots_adjust(wspace=0.2, hspace=0.3)

for i, ax in enumerate(fig.axes):

fpr, tpr, threshold = roc_curve(y_va.iloc[:, i], predict[:, i])

score = roc_auc_score(y_va.iloc[:, i], predict[:, i])

ax.plot(fpr, tpr, lw=1)

ax.plot([0, 1], [0, 1], '--k', lw=1)

ax.minorticks_on()

ax.set_ylim(0, 1)

ax.set_xlim(0, 1)

ax.set_ylabel('True Positive Rate', fontsize=8)

ax.set_xlabel('False Positive Rate', fontsize=8)

ax.tick_params(axis='both', labelsize=8)

ax.set_title(f'{target_columns[i]}: {score.round(3)}', fontsize=8)

The model reaches an average ROC AUC score of 0.92 across the six themes. Of all the themes, the model has the hardest time understanding when a review references the quality of care received. This is also reflected in the labels given by the Mechanical Turk participants, likely because of the many ways in which the theme can be described. For example, the physician's knowledge or patient recovery time, could pertain to quality of care, and there may be overlap between this theme and "bedside manner" or "communication". Perhaps it would be helpful to break this theme into multiple ones or to combine themes.